OpenAI Models Caught Handing Out Weapons Instructions

NBC News tests reveal OpenAI chatbots can still be jailbroken to give step-by-step instructions for chemical and biological weapons.

The post OpenAI Models Caught Handing Out Weapons Instructions appeared first on TechRepublic.

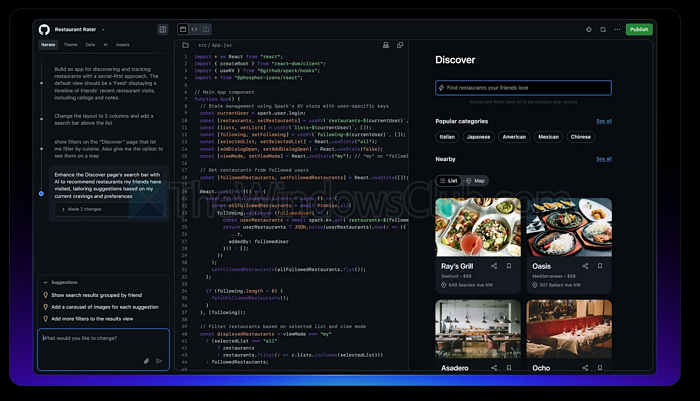

Microsoft recently launched GitHub Spark, an AI-powered tool integrated with Copilot and designed to help developers and non-coders alike deploy full-stack applications. GitHub Spark lets you build full-stack apps with plain language. The tool uses natural language processing, like other AI chatbot tools. End users will need […]
