Comment Boston Dynamics compte construire un cerveau pour Atlas

Boston Dynamics que vous connaissez tous pour ses chiens robots tueurs de la mort, vient de sortir une vidéo de 40 minutes. Pas de saltos arrière ou de robots qui dansent mais plutôt une loooongue session où ça parle stratégie IA et vision à long terme. Et comme j'ai trouvé que c'était intéressant, je partage ça avec vous !

Zach Jacowski, le responsable d'Atlas (15 ans de boîte, il dirigeait Spot avant), discute donc avec Alberto Rodriguez, un ancien prof du MIT qui a lâché sa chaire pour rejoindre l'aventure et ce qu'ils racontent, c'est ni plus ni moins comment ils comptent construire un "cerveau robot" capable d'apprendre à faire n'importe quelle tâche. Je m'imagine déjà avec un robot korben , clone de ma modeste personne capable de faire tout le boulot domestique à ma place aussi bien que moi... Ce serait fou.

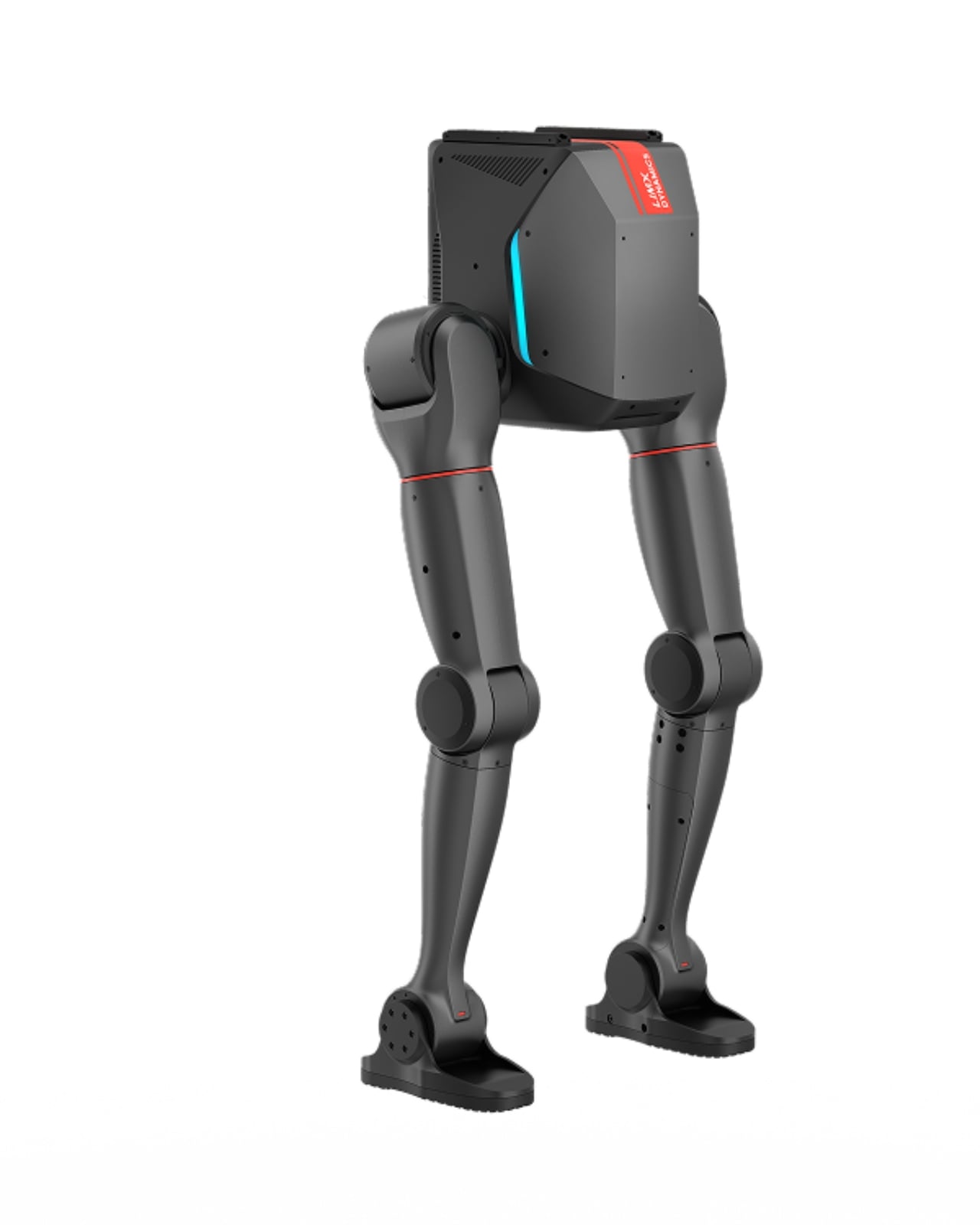

Leur objectif à Boston Dynamics, c'est donc de créer le premier robot humanoïde commercialement viable au monde et pour ça, ils ont choisi de commencer par l'industrie, notamment les usines du groupe Hyundai (qui possède Boston Dynamics).

Alors pourquoi ? Hé bien parce que même dans les usines les plus modernes et automatisées, y'a encore des dizaines de milliers de tâches qui sont faites à la main. C'est fou hein ? Automatiser ça c'est un cauchemar, car pour automatiser UNE seule tâche (genre visser une roue sur une voiture), il faudrait environ un an de développement et plus d'un million de dollars.

Ça demande des ingénieurs qui conçoivent une machine spécialisée, un embout sur mesure, un système d'alimentation des vis... Bref, multiplié par les dizaines de milliers de tâches différentes dans une usine, on serait encore en train de bosser sur cette automatisation dans 100 ans...

L'idée de Boston Dynamics, c'est donc de construire un robot polyvalent avec un cerveau généraliste. Comme ça au lieu de programmer chaque tâche à la main, on apprend au robot comment faire. Et tout comme le font les grands modèles de langage type ChatGPT, ils utilisent une approche en deux phases : le pre-training (où le robot accumule du "bon sens" physique) et le post-training (où on l'affine pour une tâche spécifique en une journée au lieu d'un an).

Mais le gros défi, c'est clairement les données. ChatGPT a été entraîné sur à peu près toute la connaissance humaine disponible sur Internet mais pour un robot qui doit apprendre à manipuler des objets physiques, y'a pas d'équivalent qui traîne quelque part.

Du coup, ils utilisent trois sources de data.

La première, c'est la téléopération. Des opérateurs portent un casque VR, voient à travers les yeux du robot et le contrôlent avec leur corps. Après quelques semaines d'entraînement, ils deviennent alors capables de faire faire à peu près n'importe quoi au robot. C'est la donnée la plus précieuse, car il n'y a aucun écart entre ce qui est démontré et ce que le robot peut reproduire. Par contre, ça ne se scale pas des masses.

La deuxième source, c'est l'apprentissage par renforcement en simulation. On laisse le robot explorer par lui-même, essayer, échouer, optimiser ses comportements. L'avantage c'est qu'on peut le faire tourner sur des milliers de GPU en parallèle et générer des données à une échelle impossible en conditions réelles. Et contrairement à la téléopération, le robot peut apprendre des mouvements ultra-rapides et précis qu'un humain aurait du mal à démontrer, du genre faire une roue ou insérer une pièce avec une précision millimétrique.

La troisième source, c'est le pari le plus ambitieux, je trouve. Il s'agit d'apprendre directement en observant des humains.

Alors est-ce qu'on peut entraîner un robot à réparer un vélo en lui montrant des vidéos YouTube de gens qui réparent des vélos ? Pas encore... pour l'instant c'est plus de la recherche que de la production, mais l'idée c'est d'équiper des humains de capteurs (caméras sur la tête, gants tactiles) et de leur faire faire leur boulot normalement pendant que le système apprend.

Et ils ne cherchent pas à tout faire avec un seul réseau neuronal de bout en bout. Ils gardent une séparation entre le "système 1" (les réflexes rapides, l'équilibre, la coordination motrice, un peu comme notre cervelet) et le "système 2" (la réflexion, la compréhension de la scène, la prise de décision). Le modèle de comportement génère des commandes pour les mains, les pieds et le torse, et un contrôleur bas niveau s'occupe de réaliser tout ça physiquement sur le robot.

C'est bien pensé je trouve. Et dans tout ce bordel ambiant autour de la robotique actuelle, eux semblent avoir trouver leur voie. Ils veulent transformer l'industrie, les usines...etc. Leur plan est clair et ils savent exactement ce qu'ils doivent réussir avant de passer à la suite (livraison à domicile, robots domestiques...).

Voilà, je pense que ça peut vous intéresser, même si c'est full english...